Artificial Intelligence

Hey all. Let's hop to it.

Politics

Earlier this month, Politico ran a profile of Sam Bankman-Fried, the 30-year-old founder and CEO of the cryptocurrency exchange FTX, who’s quickly become one of the largest megadonors in Democratic politics. Federal Election Commission filings tell us he’s already spent more than $40 million on campaigns this year, most of it on primaries. And he’s out to spend more, perhaps “anywhere from $100 million to $1 billion,” during the 2024 cycle:

“We’ve never seen something like this on this scale,” said Bradley Beychok, co-founder of American Bridge 21st Century, a Democratic super PAC. “On our side, there’s a small pool of people who write these kinds of checks and they tend to be the same folks. But Sam, to his credit, came right in with a big splash.” In 2022, Beychok continued, “he could make a big difference for Democratic candidates — if he wants.”

But it’s not clear if that’s what Bankman-Fried wants. Even though he spent about $40 million on campaigns this year, according to publicly disclosed filings with the Federal Election Commission, he told POLITICO that he plans to only spend about half that amount during the general election, when the Democratic Party faces losing its congressional majorities. He’s more motivated by promoting new candidates in safe seats than the battleground combat that decides partisan control in Washington. While his biggest spending has been on Democratic primaries, he has also contributed directly to Democratic and Republican campaigns alike.

And though Bankman-Fried has what it takes to be the biggest donor in politics — an eleven-figure bank account he’s committed to giving away before he dies, as well as some very specific ideas about what needs fixing and how — he is only now explaining the unconventional path he plans to cut through the world of Washington. It often comes with an enormous caveat: “It depends.”

That “unconventional path” is being shaped by a school and movement in ethical philosophy called “effective altruism.” The piece goes on to describe it as “maximizing good through a data-driven framework,” which seems like a fair gloss. Concretely, for Bankman-Fried, that’s meant backing candidates who support increasing funding for pandemic research.

Effective altruism’s having a bit of a moment in the press right now, and Bankman-Fried’s only partially responsible. The philosopher William MacAskill, one of the movement’s leading figures, has a new book out that’s been getting good reviews, and he’s the subject of a fascinating recent profile in The New Yorker. Dylan Matthews, who has covered and been involved in the effective altruism community for years now, wrote a long piece on it for Vox earlier this month:

The term “effective altruism,” and the movement as a whole, can be traced to a small group of people based at Oxford University about 12 years ago.

In November 2009, two philosophers at the university, Toby Ord and Will MacAskill, started a group called Giving What We Can, which promoted a pledge whose takers commit to donating 10 percent of their income to effective charities every year (several Voxxers, including me, have signed the pledge).

In 2011, MacAskill and Oxford student Ben Todd co-founded a similar group called 80,000 Hours, which meant to complement Giving What We Can’s focus on how to give most effectively with a focus on how to choose careers where one can do a lot of good. Later in 2011, Giving What We Can and 80,000 Hours wanted to incorporate as a formal charity, and needed a name. About 17 people involved in the group, per MacAskill’s recollection, voted on various names, like the “Rational Altruist Community” or the “Evidence-based Charity Association.”

The winner was “Centre for Effective Altruism.” This was the first time the term took on broad usage to refer to this constellation of ideas.

The movement blended a few major intellectual sources. The first, unsurprisingly, came from philosophy. Over decades, Peter Singer and Peter Unger had developed an argument that people in rich countries are morally obligated to donate a large share of their income to help people in poorer countries. Singer memorably analogized declining to donate large shares of your income to charity to letting a child drowning in a pond die because you don’t want to muddy your clothes rescuing him. Hoarding wealth rather than donating it to the world’s poorest, as Unger put it, amounts to “living high and letting die.” Altruism, in other words, wasn’t an option for a good life — it was an obligation.

As the MacAskill profile in The New Yorker relates, effective altruism’s adherents lived out that obligation through a kind of personal asceticism until recently; the piece is dotted with moments where MacAskill and others consider the ethics of spending on things like beer and braces with money that might be put to more noble use. But things have changed. Effective altruism organizations now have nearly $30 billion to spend thanks to the support of wealthy donors like Bankman-Fried, who, it should be said, makes his billions in an almost comically wasteful industry and is working to secure a favorable regulatory environment for it in Washington. Matthews addresses this directly in another recent piece (“crypto is, let’s be blunt, a completely useless asset class that has serious environmental costs,” he writes) but goes on to defend the new, moneyed effective altruism from the charge that philanthropy sanctifies and cements the power of the wealthy:

In this view, billionaire philanthropy represents an act whereby the rich shape the society around them without deference to the wishes of the mass of that society. This critique clearly applies to EA, just as it applies to David Koch and David Geffen’s $100 million gifts to Lincoln Center to get their names on venues, or to Nike founder Phil Knight’s $400 million gift to Stanford to get a Rhodes Scholarship-type program named after himself. All these are mass reallocations of resources done without democratic input.

So, they’re undemocratic. Now what? There are billionaires committing their fortunes, right now, to philanthropic causes. If that’s politically unjust, we should identify what they should be doing instead.

One option would be for billionaire donors, from Geffen and Knight to Tuna/Moskovitz and Bankman-Fried, to simply donate their money to the US treasury. As philosopher Richard Y. Chappell notes, this is a perfectly allowed option that enables the wealthy to put their spending decisions in the hands of Congress. (Such donations could only be used to pay off the public debt, but hey — it’s tax deductible.)

But one almost never hears people proposing this. When push comes to shove, critics of big philanthropy usually don’t want to commit themselves to the view that slightly reducing the federal budget deficit is morally or politically preferable to letting billionaires donate to other causes.

Another option would be for billionaires to shift to consumption: go on even more vacations, stay at even nicer hotels, buy even more art for themselves, etc.

There are two points worth making about all this. First, the fact that people rarely ask philanthropists to turn their money over to the government isn’t an argument against it. In fact, the very premise of “effective” altruism, that most philanthropic efforts are largely or totally ineffective, wasteful, or even counterproductive, implies that most philanthropists would be doing about as much good, or more, if they did. If that’s the case, then even if you grant that the effective altruists know which causes are objectively best to give to (you shouldn’t, but set that aside for a moment), just giving to the government seems like a solid secondary option, even if the money only goes toward reducing the deficit slightly, which might help widen the political space for domestic social spending or even international aid.

The second point is that people with money to burn actually do have outlets to choose from beyond luxuries, government, charitable giving, or, in Bankman-Fried’s case, campaign spending. Billionaires could create and give to a charitable fund run directly by the public, for instance. Or they could fund labor organizing and issue campaigns aimed at reducing inequality and democratizing the global economy, which would help move the least of us beyond needing the charity of others in the first place.

The wealthy aren’t into these ideas, of course. And part of the issue, even amongst the rich effective altruists, is that most don’t really believe they have more money than they should as a matter of political economy or even ethics. It’s telling that, as the MacAskill profile notes, effective altruists have recommended getting jobs in finance and donating surplus income. The whole project is to channel unequally distributed wealth through individuals kind and intelligent enough to use it properly. While the left hopes to cut out the middleman between the wealth the economy produces and the masses who produce and need it, the effective altruists think they can create a class of middlemen with more wisdom and sobriety than the rest of us. As I’ve said, we have good reasons to doubt this.

One of them is the rise, within the effective altruism community, of a perspective they call “long-termism,” which, as best I can tell, is rooted in two radical, earth-shaking propositions. The first is that we should care about the future consequences of our actions. The second is that we should avoid human extinction.

Those ideas might seem commonsensical at first blush — those are the intuitions animating the climate movement, for instance. As it happens, though, prominent figures in the long-termist camp have moved climate change a few slots down their list of concerns. It’s a boogeyman for simpletons relative to the real long-run threats we ought to be worried about — like, for instance, an evil or confused artificial supercomputer wiping out the human race. The New Yorker:

A well-intentioned A.I. might, as in one of Bostrom’s famous thought experiments, turn a directive to make paper clips into an effort to do so with all available atoms. Ord imagines a power-hungry superintelligence distributing thousands of copies of itself around the world, using this botnet to win financial resources, and gaining dominion “by manipulating the leaders of major world powers (blackmail, or the promise of future power); or by having the humans under its control use weapons of mass destruction to cripple the rest of humanity.” These risks might have a probability close to zero, but a negligible possibility times a catastrophic outcome is still very bad; significant action is now of paramount concern. “We can state with confidence that humanity spends more on ice cream every year than on ensuring that the technologies we develop do not destroy us,” Ord writes. These ideas were grouped together under the new heading of “longtermism.”

I’m going to go ahead and put my chips down here. Predictive accountability does matter to me as a pundit and a writer; rest assured that the commitment I’m about to make is being made in earnest. I am willing to bet all of my possessions against any takers that an artificial superintelligence will not enslave or destroy humanity. If it comes to pass and I’m still alive, find me in one of the robot gulags. I’ll hand over whatever I happen to have on me.

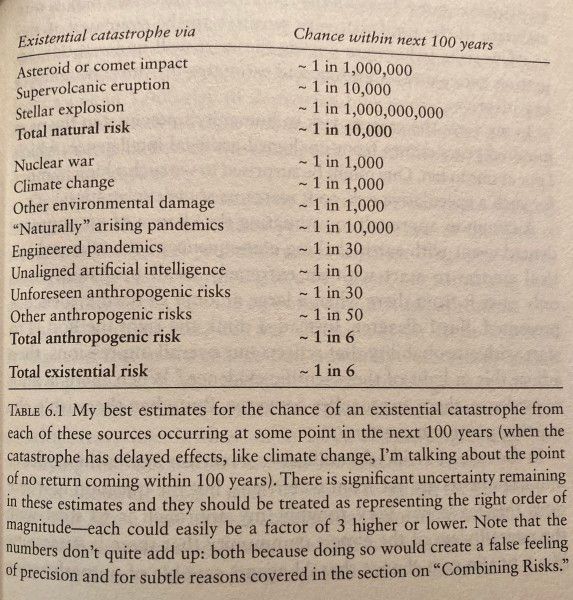

Those considering taking me up on this might find this chart of probabilities in Ord’s book helpful:

There is a disclaimer, though. “When presented in a scientific context, numerical estimates can strike people as having an unwarranted appearance of precision or objectivity,” Ord writes. “Don’t take these numbers to be completely objective. Even with a risk as well characterized as asteroid impacts, the scientific evidence only takes us part of the way: we have good evidence regarding the chance of impact, but not of the chance a given impact will destroy our future. And don’t take the estimates to be precise. Their purpose is to show the right order of magnitude, rather than a more precise probability.”

The blogger Miles Raymer puzzles over this passage in an otherwise effusive review of the book:

Readers familiar with or sympathetic to Ord’s methodology will see this for what it is: a responsible writer’s attempt to stave off misinterpretation and not convey an unjustified sense of certainty. But one part of me reads this and wonders, “If your numbers are so damned subjective and imprecise, why include them at all?” I suspect I’m not the only person who reacted that way. Unfortunately, this objection can be bolstered by Ord’s lack of transparency regarding how some of his estimates were reached. He includes helpful statistical summaries for all the natural [existential risks,] but I couldn’t find anything similar for the anthropogenic or future ones––not even in the appendices. Why is [existential risk] for unaligned AI determined to be 1 in 10 instead of 1 in 15, or 1 in 20? Is this because such calculations don’t exist, or perhaps because Ord decided not to share them? Either way, their absence leaves a space open to question just how willing we should be to take Ord’s word for it.

We shouldn’t be. As you’ve surely grasped, I think the idea that we’re going to create a sentient, willful, and uncontrollable AI powerful enough to destroy us and mechanically sustain itself without us someday is ludicrous. Every time I look into what all that fuss is about, I’m amazed anew at how vague and speculative the concerns seem to be. But even if you grant that there are risks to the development of AI, and there are, those risks are public problems that the public ought to have a role in debating and devising solutions to. What we have instead with effective altruism in its current state is an ethical perspective that amounts to another set of justifications, as though they needed more, for privileging the judgments of elites, even when those judgments seem highly suspect. MacAskill seems like a decent guy; I don’t think he can be faulted for the way he’s chosen to live his life. That said, the idea, which he defends in his book, that nuclear Armageddon might not spell the end of humanity if it ever happens raises a few questions about how soundly this whole body of thought has been constructed. From Scott Alexander’s review of his book:

Suppose that some catastrophe “merely” kills 99% of humans. Could the rest survive and rebuild civilization? MacAskill thinks yes, partly because of the indomitable human spirit:

"[Someone might guess that] even today, [Hiroshima] would be a nuclear wasteland…but nothing could be further from the truth. Despite the enormous loss of life and destruction of infrastructure, power was restored to some areas within a day, to 30% of homes within two weeks, and to all homes not destroyed by the blast within four months. There was a limited rail service running the day after the attack, there was a streetcar line running within three days, water pumps were working again within four days, and telecommunications were restored in some areas within a month. The Bank of Japan, just 380 metres from the hypocenter of the blast, reopened within just two days."

…but also for good factual reasons. For example, it would be much easier to reinvent agriculture the second time around, because we would have seeds of our current highly-optimized crops, plus even if knowledgeable farmers didn’t survive we would at least know agriculture was possible.

The assumption that survivors in indeterminate locations with indeterminate technical capacity and know-how in a ravaged world would have the skills and presence of mind to reinvent agriculture afresh before dying of environmental causes or by their own hands — I mean, we’re really just daydreaming here. There’s not much distance between this and arguing whether Batman could take Superman in a fight. But trawl the tech-adjacent blogs enough and you’ll find people discussing these kinds of things seriously.

Anyway, when MacAskill isn’t thinking through the survivability of nuclear winter, he’s pinging donors like Bankman-Fried, who’ve nominally pledged to give, for money. The New Yorker:

FTX spent an estimated twenty million dollars on an ad campaign featuring Tom Brady and Gisele Bündchen, and bought the naming rights to the Miami Heat’s arena for a hundred and thirty-five million dollars. Last year, MacAskill contacted Bankman-Fried to check in about his promise: “Someone gets very rich and, it’s, like, O.K., remember the altruism side? I called him and said, ‘So, still planning to donate?’ ”

It appears that even billionaires who’ve read Derek Parfit can have trouble being ethical. And as I’ve suggested previously, the fundamental questions before us when it comes to inequality — and this confuses people on the left, too — aren’t really about whether someone with a spare billion to spend should buy mansions, give it away, or shove it under a very large mattress. They’re about why the economy gives such people more than they know what to do with in the first place and whether the disadvantaged might be helped more by different economic arrangements than by the benevolence and empathy of people who clawed their way to riches.

What’s more, I think I’m a long-termist in the sense that I do think existential risks are important and worth shoring ourselves against. And I think future generations contending with climate change, pandemics, evil computers, or any other large-scale problems are probably going to find having strong and stable political institutions useful. I don’t know if Bankman-Fried disagrees. But he is giving some of his money to Republicans.

A Song

“100% Inheritance Tax” - Downtown Boys (2015)

Bye.

Nwanevu. Newsletter
